AI systems and algorithms can process data far faster than humans, but far less discerningly. That efficiency makes AI a tempting tool for entrepreneurs and executives eager to streamline operations, cut costs or gain a competitive edge. However, ethical cracks start to show when you give artificial intelligence free rein in decision-making processes.

Overreliance Without Understanding

AI tools often appear foolproof on the surface. Trusting them unquestioningly can be dangerous, especially when a lack of transparency leaves users unable to understand the conclusions or how the system reached them. In deep learning, neural networks process data through many layers, so the reasoning behind a given output is often difficult to trace, even for the developers who built the model.

The more businesses rely on AI to guide decisions, the higher the risk of significant missteps when an algorithm goes wrong. This is especially harmful in industries like health care, finance or recruitment, where machine-driven decisions can directly harm people’s lives. Machine outputs can be misleading, discriminatory or flat-out wrong.

The Illusion of Expertise

Most people who claim to “use AI” are experimenting, not engineering. Many organizations report high levels of AI adoption, and employees may say they’re confident using it, yet only 12% of staff have actual working experience with artificial intelligence technologies. This gap between perception and reality feeds into misplaced trust.

When teams don’t understand how a model is trained or what data it draws on, they’re unlikely to challenge its outputs — even when something feels off. That false sense of competence creates openings for unethical use, unconscious bias and missed red flags.

Trusting Black Boxes

Artificial intelligence technologies seem to be developing faster than humans can keep track of them. Systems built on large neural networks with deep learning algorithms are known as “black boxes” because their inner workings are obscure. Yet companies increasingly treat these systems’ outputs as objective.

That’s the real problem. You can’t evaluate fairness or legality if you don’t know how a machine arrived at a recommendation or action. The lack of transparency makes it harder to contest outcomes, explain actions to stakeholders or spot errors caused by faulty reasoning.

Accountability Is Still a Human Characteristic

A machine can recommend, but it can’t be held accountable. Even when AI drives a decision, the people who build and deploy it must answer for its impact. This applies to business leaders, developers and anyone integrating AI into workflows.

Oversight defines who’s responsible, how decisions are reached, how performance is audited and what happens when things go wrong. Clear accountability frameworks are essential, and they have already been proposed for clinical settings. Without that structure, businesses expose themselves to reputational, financial and legal risk.

Biased Datasets — Compromised Results

Algorithms reflect the data they’re trained on, and most historical datasets, like any dataset compiled by humans, are riddled with bias. Whether it’s underrepresentation in training samples or skewed outcomes from decades of discrimination, AI can amplify existing inequalities rather than correct them.

Even well-intentioned systems can perpetuate harm. Without proactive testing, refinement and diverse development teams, bias seeps in quietly and becomes normalized.
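One simple form of proactive testing is comparing outcome rates across groups. The sketch below computes each group’s selection rate and flags any group whose rate falls below 80% of the highest group’s rate, a threshold borrowed from the “four-fifths rule” in U.S. employee selection guidelines. The group labels, sample data and function names are hypothetical, and a real audit would also need statistical testing and legal review.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of positive outcomes per group.

    `decisions` is a list of (group, approved) pairs, e.g. ("A", True).
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}


def flag_disparate_impact(decisions, threshold=0.8):
    """Flag groups whose selection rate is below `threshold` times the highest rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        return {}  # nobody was selected, so the ratios are undefined
    return {g: r / best for g, r in rates.items() if r / best < threshold}


# Hypothetical screening results for two applicant groups.
sample = [("A", True)] * 6 + [("A", False)] * 4 + [("B", True)] * 3 + [("B", False)] * 7
print(selection_rates(sample))        # {'A': 0.6, 'B': 0.3}
print(flag_disparate_impact(sample))  # {'B': 0.5}
```

A flag is a prompt for human investigation, not proof of discrimination; the ratio says nothing about why the rates differ.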

Ethical Data Use and Security

AI doesn’t just process public data. It often absorbs sensitive personal information, sometimes without consent, and some systems also embed watermarks or other hidden signals in their output. Poorly secured data pipelines can expose businesses to sophisticated cyberattacks, legal violations, competitive risk and exploitation.

When proprietary or customer data gets fed into large-scale models, it’s not always clear where that information ends up or who has access. One slip could be catastrophic in heavily regulated sectors such as health care or finance.

Guardrails for Ethical Use and Decision-Making

AI isn’t inherently unethical. The challenge lies in how it’s applied and whether organizations put guidelines and quality checks in place. One example of building ethics into an AI system is “Constitutional AI,” the approach Anthropic uses to train its Claude models to follow a written set of guiding principles.

Human oversight is essential to ensure that outputs are ethical, legal and aligned with company values. These controls aren’t just best practices; they’re survival strategies in an increasingly AI-reliant world.

To use AI responsibly, businesses should:

- Establish oversight thresholds for when human review is required.

- Run system performance audits regularly to catch bias or drift.

- Maintain audit trails that document each step of the decision-making process to support accountability.

- Define what accountability looks like, so responsibility doesn’t get passed to the machine.

- Prioritize transparency in internal processes and customer-facing policies.
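The oversight thresholds and audit trails listed above can be illustrated in a few lines. This is a minimal sketch, assuming a model that returns a recommendation with a confidence score between 0 and 1: anything below a chosen threshold, or in a category the business marks as high-stakes, is routed to a person, and every routing decision is appended to a log. The 0.9 threshold, the category names and the `route` function are hypothetical choices, and the in-memory list stands in for durable, tamper-resistant storage.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone

REVIEW_THRESHOLD = 0.9                        # hypothetical: below this confidence, a person decides
HIGH_STAKES = {"loan_denial", "termination"}  # hypothetical: always require human review


@dataclass
class Decision:
    case_id: str
    recommendation: str
    confidence: float
    routed_to: str   # "model" or "human_review"
    timestamp: str


def route(case_id, recommendation, confidence, audit_log):
    """Decide whether a model recommendation needs human review, and log the outcome."""
    needs_human = confidence < REVIEW_THRESHOLD or recommendation in HIGH_STAKES
    decision = Decision(
        case_id=case_id,
        recommendation=recommendation,
        confidence=confidence,
        routed_to="human_review" if needs_human else "model",
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    # Append-only record so each step can be traced and explained later.
    audit_log.append(json.dumps(asdict(decision)))
    return decision


log = []
print(route("c-101", "approve", 0.97, log).routed_to)      # model
print(route("c-102", "approve", 0.62, log).routed_to)      # human_review
print(route("c-103", "loan_denial", 0.99, log).routed_to)  # human_review
print(len(log))                                            # 3
```

Routing high-stakes categories to a person regardless of confidence reflects the point that a confident model can still be wrong; the log records which path each case took, so responsibility stays traceable to people.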

Use AI With Eyes Wide Open

AI should support decision-making, not replace it. It’s a powerful tool, but not a moral agent. The smarter your systems get, the more important human ethics, transparency and accountability become.

If you’re relying on AI to help you make business-critical decisions, you can’t afford to let convenience override caution. Ask how your systems work, and question their inputs and outputs. Make sure there’s always a person — not a black box — behind your company’s choices.

FAQ

Is It Ethical to Use AI in Decision-Making at All?

With responsible design, transparent processes and human oversight, using AI in decision-making and automation can be ethically sustainable. Ethical use depends on how AI is integrated and monitored.

What Is the Biggest Ethical Risk of Using AI?

Unjustified trust in opaque systems that may carry hidden bias, which can lead to unfair or harmful decisions that no one is held accountable for.

How Can Companies Ensure Responsible Use of AI?

By developing internal frameworks for oversight, ensuring transparency, running regular audits and involving diverse stakeholders in system design so that datasets are more comprehensive and outputs more reliable.

What Role Should Humans Play When AI Features in Decision-Making?

Humans should act as informed overseers. That means understanding how the AI works, validating its outputs and stepping in when ethical, legal or contextual judgment is needed. AI can assist, but final accountability must stay human.

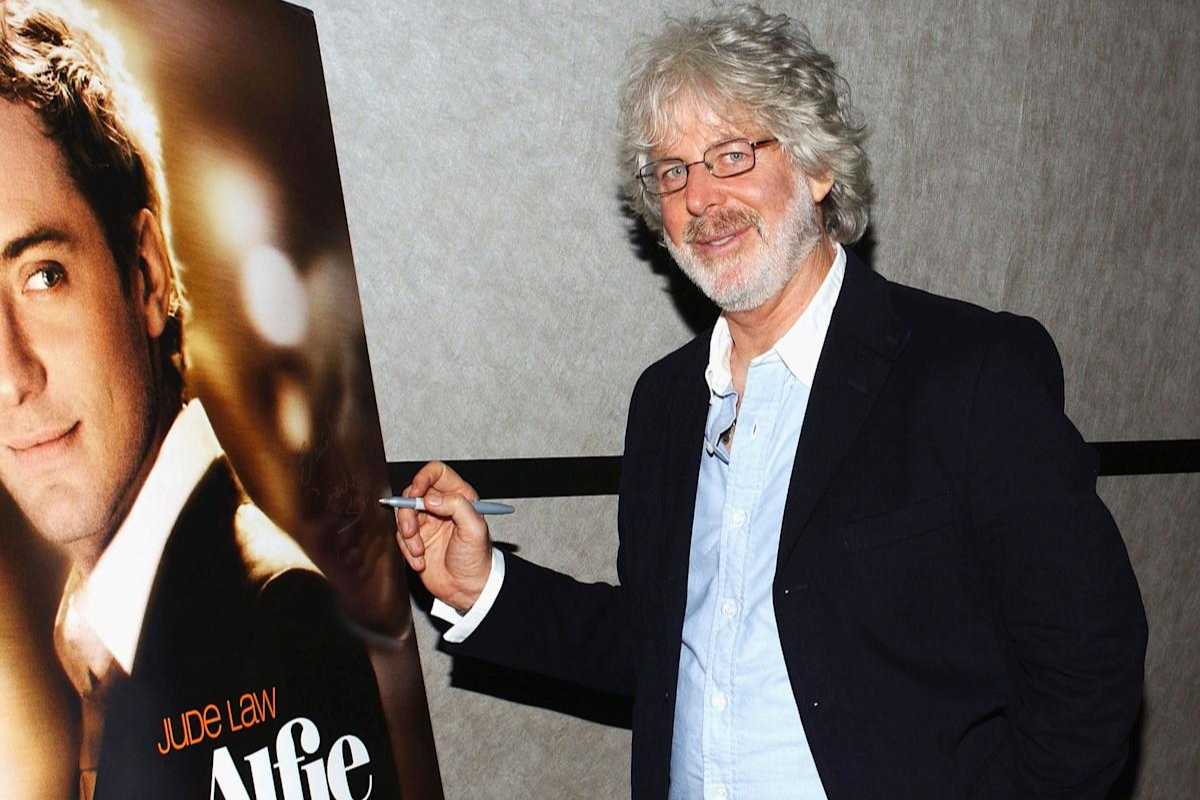

Jack Shaw, a seasoned writer and senior editor of Modded Magazine, harnesses his technological expertise to unravel the complexities of business innovation for a diverse readership. His insights can be seen in publications including Safeopedia, USCCG and Insurance Thought Leadership, guiding industry professionals through the evolving digital landscape.
